Social Media Data Privacy and Security Audit Checklist

In an era where data is the new currency, social media platforms face unprecedented challenges in safeguarding user privacy and maintaining robust security measures. This comprehensive audit checklist is designed to evaluate and enhance data privacy practices and security protocols across social media platforms. By addressing key areas such as data collection, storage, usage, user consent, access controls, and incident response, this checklist helps platforms identify vulnerabilities, ensure compliance with global privacy regulations, and build user trust. Regular audits using this checklist can lead to improved data governance, enhanced security posture, and a stronger commitment to user privacy in the ever-evolving landscape of social media.

Social media platforms encompass a diverse range of services, from social networking sites and microblogging platforms to video-sharing applications and professional networking tools. These platforms have become integral to personal communication, business marketing, and information dissemination. The industry's rapid growth has led to increased scrutiny from regulators, advertisers, and users, necessitating robust auditing and compliance measures.

Auditing in the social media sector is essential for maintaining platform integrity, protecting user data, and ensuring compliance with evolving regulations. Regular audits help identify potential risks, assess the effectiveness of content moderation systems, and verify adherence to privacy standards. By implementing comprehensive audit processes, social media companies can enhance operational efficiency, build user trust, and mitigate legal and reputational risks.

The regulatory landscape for social media platforms is complex and changes frequently. Companies must navigate a web of national and international regulations governing data protection, content moderation, and online safety. Key regulatory frameworks include the General Data Protection Regulation (GDPR) in the European Union, the California Consumer Privacy Act (CCPA), a state law in the United States, and various country-specific laws addressing online content and user rights. Compliance with these regulations requires ongoing vigilance and regular audits to ensure adherence to legal requirements and industry best practices.

Auditing social media platforms requires a comprehensive approach that addresses the unique challenges of the digital ecosystem while adhering to universal audit principles. Key audit components include data privacy assessments, content moderation reviews, and security vulnerability checks. Best practices involve implementing robust audit trails, conducting regular penetration testing, and maintaining comprehensive documentation of platform policies and procedures.

Data privacy audits are crucial for social media platforms, given the vast amounts of personal information they handle. These audits assess the platform's data collection, storage, and processing practices to ensure compliance with privacy regulations. Security audits evaluate the platform's defenses against cyber threats, including encryption protocols, access controls, and incident response procedures. Regular penetration testing helps identify potential vulnerabilities before they can be exploited by malicious actors.

Auditing content moderation processes is essential for maintaining platform integrity and user safety. These audits evaluate the effectiveness of automated filtering systems, human moderator performance, and the consistency of policy enforcement. They also assess the platform's ability to respond to emerging threats such as misinformation campaigns or coordinated inauthentic behavior. Best practices include regular reviews of moderation guidelines, ongoing training for moderators, and transparency reporting on content removal decisions.

User experience audits assess the platform's usability, accessibility, and overall user satisfaction. These audits evaluate factors such as interface design, load times, and feature functionality across different devices and operating systems. Accessibility audits ensure that the platform is usable by individuals with disabilities, complying with standards such as the Web Content Accessibility Guidelines (WCAG). Regular user feedback collection and analysis are integral to these audit processes.

Audits of advertising and monetization practices are critical for ensuring compliance with advertising standards and protecting users from deceptive or harmful content. These audits review ad placement algorithms, sponsored content disclosures, and the effectiveness of ad review processes. They also assess compliance with regulations governing targeted advertising and data usage for marketing purposes. Best practices include implementing clear guidelines for advertisers, regular reviews of ad content, and maintaining transparent records of sponsored partnerships.

Social media platforms face unique auditing and compliance challenges due to their global reach, rapid content generation, and evolving regulatory landscape. Addressing these challenges requires innovative solutions and adherence to industry best practices.

Social media platforms operate across multiple jurisdictions, each with its own set of regulations and compliance requirements. This complexity is further compounded by the rapid pace of regulatory changes in the digital space. To navigate this challenge, platforms must develop comprehensive compliance frameworks that are flexible enough to adapt to new regulations. Implementing centralized compliance management systems can help track and manage various regulatory requirements across different regions. Regular training sessions for compliance teams on emerging regulations and their implications for platform operations are essential. Platforms should also consider establishing dedicated regulatory affairs teams to monitor legislative developments and proactively adjust compliance strategies.
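As a minimal sketch of what a centralized compliance register might track, the Python below groups requirements by region and lists those whose audit is overdue. The `Requirement` fields, the `overdue` and `by_region` helpers, and the 365-day default are illustrative assumptions, not features of any particular compliance product.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class Requirement:
    """One regulatory obligation tracked in the register (hypothetical schema)."""
    framework: str                       # e.g. "GDPR", "CCPA", "DSA"
    region: str                          # jurisdiction where it applies
    topic: str                           # short description of the obligation
    last_audited: Optional[date] = None  # None means never audited


def overdue(requirements, today, max_age_days=365):
    """Return requirements never audited or last audited before the cutoff."""
    cutoff = today - timedelta(days=max_age_days)
    return [
        r for r in requirements
        if r.last_audited is None or r.last_audited < cutoff
    ]


def by_region(requirements):
    """Group requirements by region so each regional team sees its own list."""
    grouped = {}
    for r in requirements:
        grouped.setdefault(r.region, []).append(r)
    return grouped
```

A regulatory affairs team could run `overdue(register, date.today())` on a schedule and route each result to the owner of that region. The audit interval would in practice be set per framework rather than globally.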

The dynamic nature of social media regulation means that platforms must constantly update their policies and practices to remain compliant. This requires a proactive approach to monitoring regulatory changes and assessing their impact on platform operations. Developing strong relationships with regulatory bodies and industry associations can provide valuable insights into upcoming changes. Implementing agile policy development processes allows platforms to quickly adapt to new requirements. Regular policy reviews and impact assessments help ensure that all aspects of the platform remain compliant with the latest regulations. Platforms should also invest in technology solutions that can automatically flag potential compliance issues arising from regulatory updates.

Maintaining comprehensive and up-to-date documentation is crucial for demonstrating compliance during audits. However, the sheer volume of data generated by social media platforms can make documentation management a significant challenge. To address this, platforms should implement robust document management systems that allow for easy storage, retrieval, and version control of compliance-related documents. Establishing clear documentation protocols and assigning responsibility for maintaining specific types of records can help ensure consistency. Regular audits of documentation processes can identify gaps and areas for improvement. Implementing automated documentation tools that capture and organize compliance-related data in real-time can significantly enhance efficiency and accuracy.
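One way to make compliance records tamper-evident is a hash-chained audit trail, where each record stores the hash of the one before it. This is a swapped-in illustration rather than something the text prescribes. The record layout and the `append_record` and `verify_trail` helpers below are hypothetical, and a production system would also need durable storage and access controls.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first record


def _digest(body: dict) -> str:
    """SHA-256 over a canonical (sorted-key) JSON encoding of the record body."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_record(trail: list, event: dict) -> dict:
    """Append an event, linking it to the hash of the previous record."""
    prev_hash = trail[-1]["hash"] if trail else GENESIS_HASH
    body = {"index": len(trail), "event": event, "prev_hash": prev_hash}
    record = {**body, "hash": _digest(body)}
    trail.append(record)
    return record


def verify_trail(trail: list) -> bool:
    """Recompute every hash and link; any edited or reordered record fails."""
    prev_hash = GENESIS_HASH
    for i, record in enumerate(trail):
        body = {
            "index": record["index"],
            "event": record["event"],
            "prev_hash": record["prev_hash"],
        }
        if record["index"] != i:
            return False
        if record["prev_hash"] != prev_hash:
            return False
        if record["hash"] != _digest(body):
            return False
        prev_hash = record["hash"]
    return True
```

Because each hash covers the previous one, changing an old record invalidates every record after it, which gives auditors a quick integrity check before they rely on the documentation.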

The human element in content moderation and policy enforcement introduces the risk of inconsistency and errors. Mitigating this challenge requires a multi-faceted approach. Comprehensive training programs for content moderators should be implemented, covering not only platform policies but also decision-making skills and bias awareness. Implementing quality assurance processes, such as random checks of moderation decisions, can help identify and correct errors. Developing clear escalation procedures for complex cases ensures that difficult decisions are reviewed by experienced team members. Leveraging artificial intelligence and machine learning tools to assist human moderators can improve consistency and reduce the likelihood of errors. Regular performance evaluations and feedback sessions for moderators can help identify areas for improvement and recognize exemplary work.
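The random checks of moderation decisions mentioned above can be sketched as a simple sampling step followed by a disagreement measure. The `ModerationDecision` fields, the sampling rate, and the `second_opinions` mapping are assumptions made for illustration. Real programs often stratify samples by policy area or moderator rather than sampling uniformly.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class ModerationDecision:
    decision_id: str
    moderator_id: str
    action: str  # e.g. "remove", "keep", "escalate"


def sample_for_review(decisions, rate, seed=None):
    """Pick a uniform random subset of decisions for a second reviewer."""
    if not 0 < rate <= 1:
        raise ValueError("rate must be in (0, 1]")
    decisions = list(decisions)
    if not decisions:
        return []
    rng = random.Random(seed)  # a fixed seed makes the sample reproducible
    k = max(1, round(len(decisions) * rate))
    return rng.sample(decisions, k)


def disagreement_rate(sampled, second_opinions):
    """Share of sampled decisions where the reviewer chose a different action.

    second_opinions maps decision_id to the reviewer's action; decisions
    missing from the mapping are counted as agreements.
    """
    if not sampled:
        return 0.0
    disagreements = sum(
        1 for d in sampled
        if second_opinions.get(d.decision_id, d.action) != d.action
    )
    return disagreements / len(sampled)
```

A rising disagreement rate for a given policy area is a signal to revisit the guideline wording or the training for that area, not only the individual moderator.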

Conducting thorough audits and maintaining robust compliance programs require significant resources, which can strain platforms of any size. To address this, platforms should prioritize their compliance efforts based on risk assessments, focusing resources on the most critical areas. Implementing automated compliance monitoring tools can help stretch limited resources by flagging potential issues for human review. Developing partnerships with external compliance experts and auditors can provide access to specialized knowledge without the need for full-time in-house staff. Platforms should also consider fostering a culture of compliance throughout the organization, making every employee responsible for identifying and reporting potential issues. This distributed approach can help alleviate the burden on dedicated compliance teams.
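Risk-based prioritization is often expressed as a likelihood-times-impact score. The sketch below assumes a 1 to 5 scale for both factors. The `ComplianceArea` class, the scale, and the example areas are illustrative choices, not a scoring model taken from any standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ComplianceArea:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain), assumed scale
    impact: int      # 1 (minor) to 5 (severe), assumed scale

    def __post_init__(self):
        # Reject values outside the assumed 1-5 scale.
        for label, value in (("likelihood", self.likelihood), ("impact", self.impact)):
            if not 1 <= value <= 5:
                raise ValueError(f"{label} must be between 1 and 5")

    @property
    def risk_score(self) -> int:
        """Simple likelihood x impact score; higher means audit sooner."""
        return self.likelihood * self.impact


def prioritize(areas):
    """Order areas from highest to lowest risk score."""
    return sorted(areas, key=lambda a: a.risk_score, reverse=True)


if __name__ == "__main__":
    areas = [
        ComplianceArea("ad disclosures", likelihood=2, impact=3),
        ComplianceArea("data privacy", likelihood=4, impact=5),
        ComplianceArea("content moderation", likelihood=3, impact=4),
    ]
    for area in prioritize(areas):
        print(area.name, area.risk_score)
```

A multiplicative score is easy to explain to non-specialists, though it treats a rare, severe risk the same as a frequent, minor one. Teams that care about that distinction may prefer a risk matrix with explicit thresholds.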

The audit standards and regulatory framework for social media platforms encompass a wide range of guidelines and requirements designed to ensure user safety, data protection, and platform integrity. These standards are crucial for maintaining trust and compliance in the rapidly evolving digital landscape.

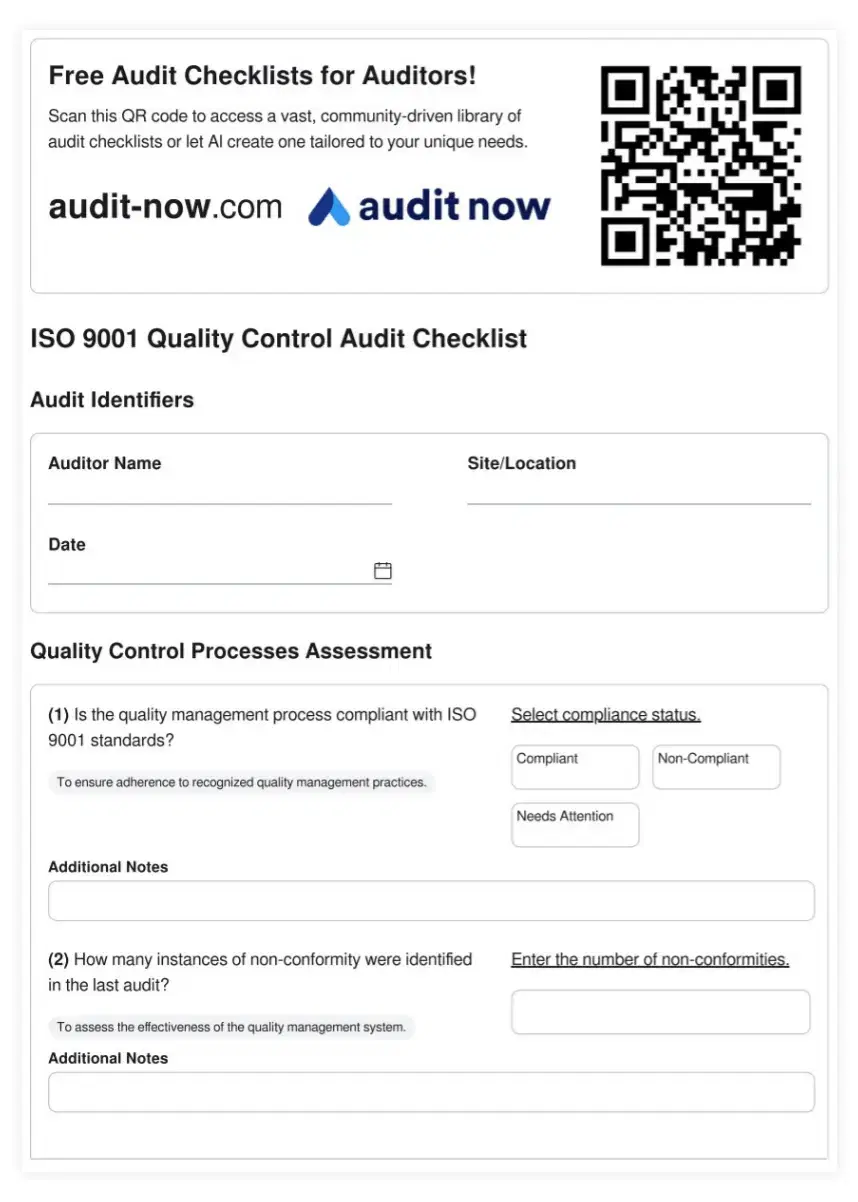

ISO standards provide a framework for quality management and information security that is applicable to social media platforms. ISO 27001, for instance, sets out the specifications for an information security management system (ISMS). This standard is particularly relevant for social media platforms, given the vast amounts of user data they handle. Implementing ISO 27001 involves conducting regular risk assessments, establishing security controls, and continuously monitoring and improving the ISMS. Another relevant standard is ISO 9001, which focuses on quality management systems. For social media platforms, this can translate to ensuring consistent user experience, reliable service delivery, and effective process management. Adherence to these ISO standards demonstrates a commitment to best practices in data protection and operational excellence, which can enhance user trust and regulatory compliance.

Data protection regulations form a critical part of the audit framework for social media platforms. The General Data Protection Regulation (GDPR) in the European Union sets a high bar for data protection and privacy. It mandates strict controls over data collection, processing, and storage, and gives users significant rights over their personal data. Compliance with GDPR requires regular audits of data handling practices, clear consent mechanisms, and robust data breach notification procedures. In the United States, the California Consumer Privacy Act (CCPA), a state law, imposes comparable requirements for California residents, with a focus on transparency in data collection and giving users control over their personal information. Social media platforms must conduct regular audits to ensure compliance with these and other regional data protection laws.

Content moderation is a critical area for social media platform audits, with standards evolving to address issues such as hate speech, misinformation, and harmful content. While there is no single global standard for content moderation, platforms are increasingly expected to adhere to principles of transparency, consistency, and respect for freedom of expression. The European Union's Digital Services Act (DSA) sets forth requirements for content moderation practices, including regular risk assessments and transparency reporting. In the United States, Section 230 of the Communications Decency Act shields platforms from liability for most user-generated content and protects good-faith moderation decisions, rather than mandating specific moderation practices.

Given the critical role of social media platforms in modern communication, cybersecurity and infrastructure security are paramount concerns. The National Institute of Standards and Technology (NIST) Cybersecurity Framework provides a comprehensive set of guidelines for managing and reducing cybersecurity risk. This framework is widely recognized and can be adapted for social media platforms. Regular security audits should assess the platform's defenses against various cyber threats, including data breaches, DDoS attacks, and account hijacking. These audits typically involve penetration testing, vulnerability assessments, and reviews of incident response plans. Additionally, platforms that store, process, or transmit payment card data must comply with the Payment Card Industry Data Security Standard (PCI DSS).

In the rapidly evolving landscape of social media platforms, effective audit solutions are essential for maintaining compliance, protecting user data, and ensuring platform integrity. Various approaches and tools are available in the market, each offering unique features to address the complex audit requirements of social media companies. When selecting an audit management solution, key features to consider include real-time monitoring capabilities, customizable audit templates, automated reporting functions, and integration with existing platform systems. The ability to conduct cross-functional audits and track compliance across multiple regulatory frameworks is also crucial. Some solutions offer advanced analytics and machine learning capabilities to identify patterns and potential risks in user behavior and content moderation decisions. Others focus on streamlining the documentation process, providing robust version control and audit trail features. For organizations seeking a comprehensive audit solution tailored to the unique challenges of social media platforms, Audit Now offers a range of tools and services designed to enhance compliance and operational efficiency.

Digital Marketing Campaign Audit Checklist

Marketing Technology Stack Audit Checklist

Influencer Marketing Campaign Audit Checklist

Social Media Influencer Campaign Audit Checklist

Programmatic Advertising Audit Checklist

Social Media Content Moderation Audit